How can AI help advance science?

5 researchers reveal the opportunities and challenges of using AI

AI is playing a critical role in supporting scientific discovery, transforming the way research is conducted by introducing new possibilities. From enhancing cancer treatment and supporting drug discovery to revolutionizing library services, the opportunities and challenges AI presents are shaping the future of science. Here, experts in different fields share their insights on the transformative potential of AI.

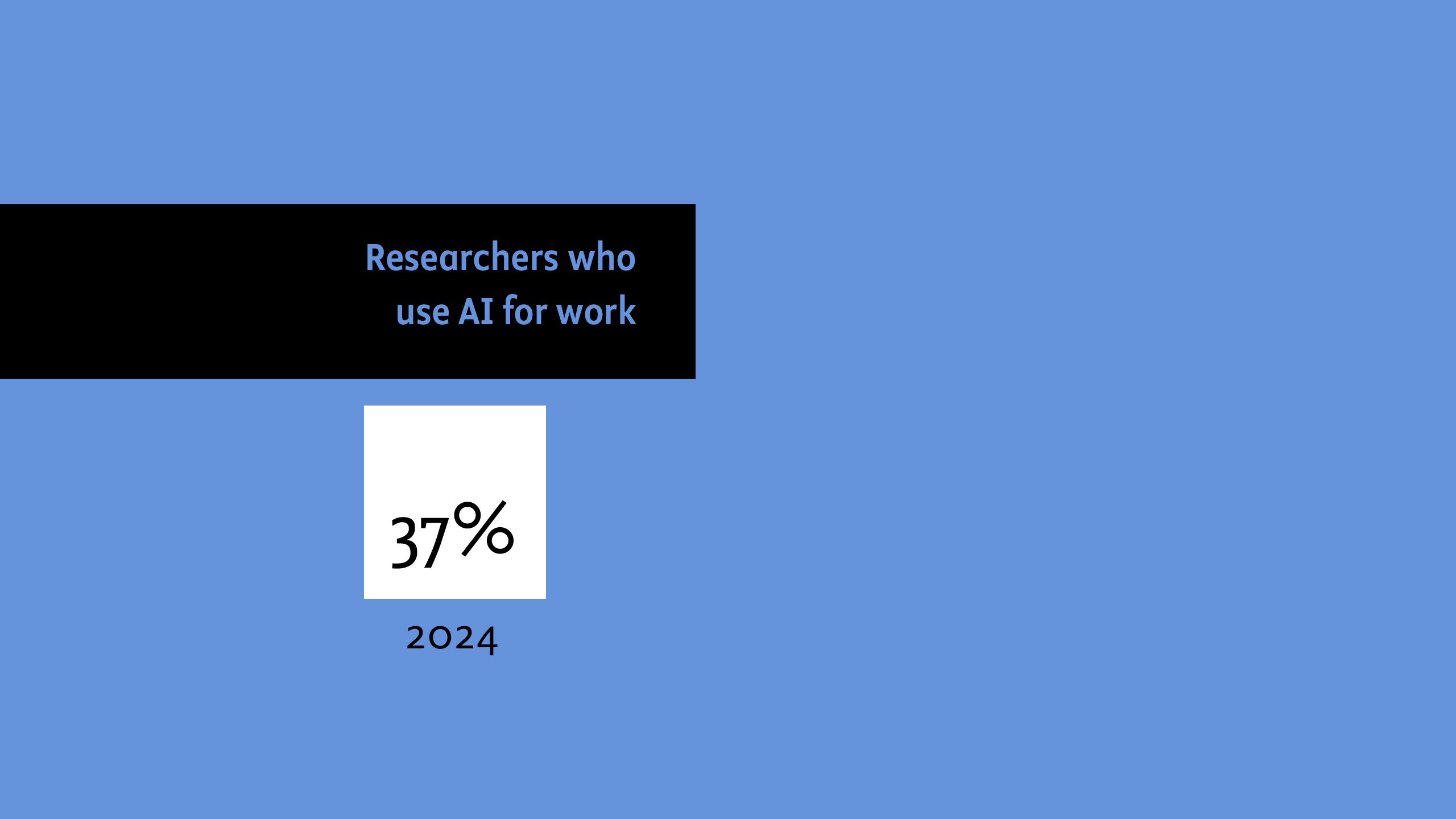

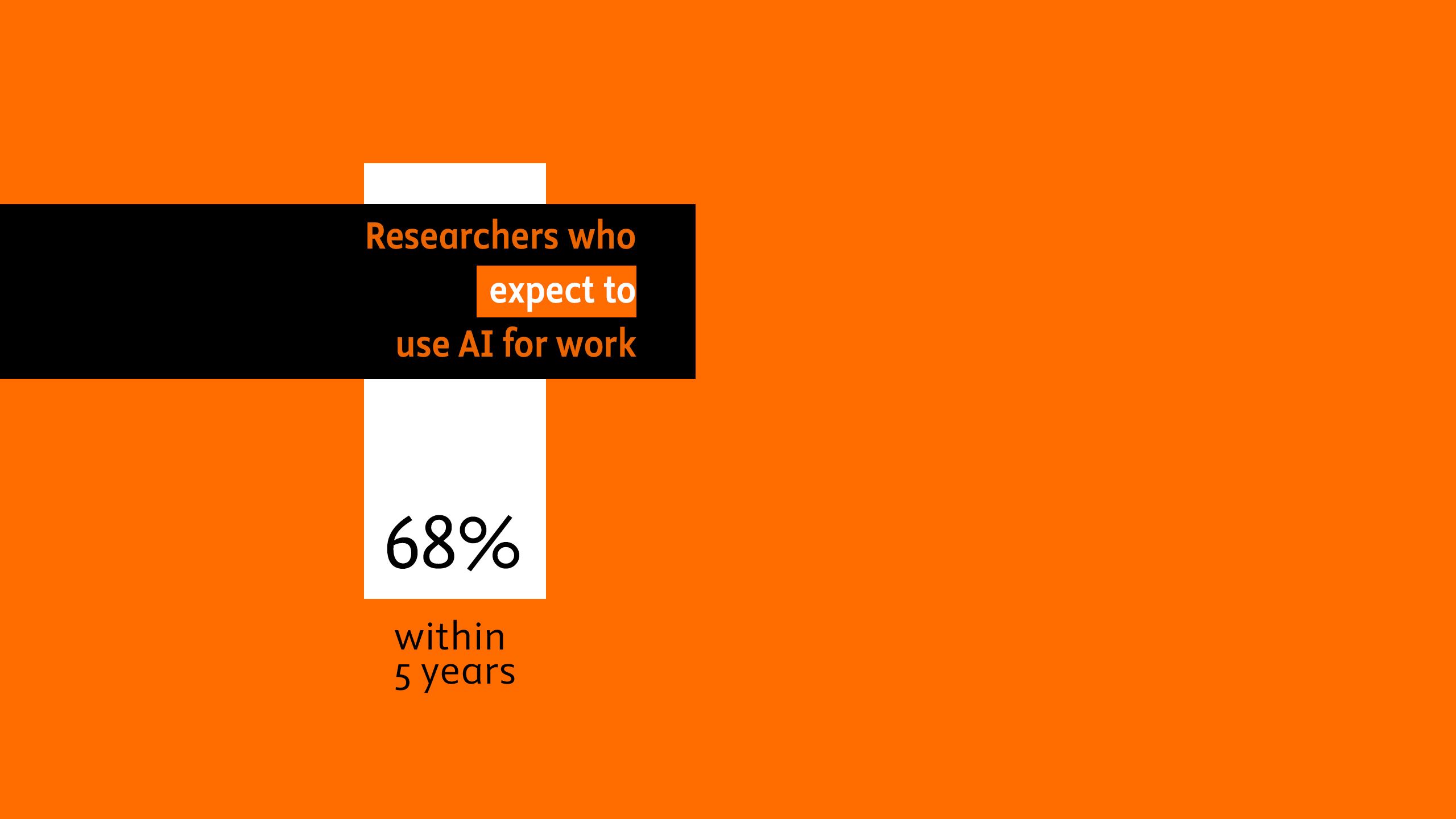

Read their stories here. And see what researchers and clinicians worldwide are saying about AI in our report Insights 2024: Attitudes Toward AI.

When your research affects cancer patients, reliable AI is critical

Dr Engie El Sawaf knows the difference research can make to people’s lives.

As a Pharmacology Lecturer Assistant at Future University in Egypt, she researches neuropathy, a common side effect of chemotherapy that can be so severe that patients choose to stop cancer treatment.

“It’s extremely hard for people — it can have a huge impact on quality of life,” she said. “So if you can find solutions to decrease the pain, you can make a big difference to them during their treatment. It gives me motivation — the idea that I can do something to help these people.”

“I recently lost my father to lung cancer, and reducing his pain was my family’s top priority. Hearing from Engie — one of many researchers who have advanced the research that greatly helped my father — is really motivating.”

The ever-evolving nature of Engie’s field means she often needs to bring herself up to speed quickly in new areas of research. She’s found that generative AI tools, such as Scopus AI, can help bring her to the right information faster:

I’ve been working with Scopus AI for a couple of months, and it’s been great. I’d previously used ChatGPT, but it’s not a tool for scientific literature review. When I heard there was something new based on Scopus — which is the database I trust most when I’m doing a literature review — I started using it a lot.

There’s a lot of poor information that gets generated by other, less specialized tools. Even though they’re written in a way that seems true, the results are often very inaccurate or outright false. Using Scopus AI, you get information that is generated from abstracts that come from peer-reviewed journals. So mostly you get trusted information.

Engie uses Scopus AI to search the literature and generate summaries that provide an understanding of new areas:

I’ll do a research query on Scopus to get a list of research articles and review articles and so on. At the same time, I will run a query on Scopus AI to get a summary. That way, before I go through all the review articles and everything on the Scopus database, I can examine the summary and understand what the key points to follow up on are.

A catalyst for resilient food systems

Artificial intelligence is not just a tool for efficiency; it’s a game-changer in addressing global food challenges. Abhishek Roy, Global R&D Leader of AI at Cargill, emphasizes that AI enables faster, more informed decisions, helping to tackle issues like feeding a growing population, optimizing supply chains, and adapting to changing consumption patterns.

Cargill’s innovative use of AI includes tools like "Ask Emma," which generated 140 new snack concepts in days, and "Project Galleon," which analyzes poultry gut microbiota to improve feed additives. These targeted applications demonstrate how small, data-driven interventions can create significant impact, translating even a 2% efficiency gain into hundreds of millions in value.

Roy envisions a future where R&D professionals shift from creators to curators, leveraging AI to generate thousands of possibilities and refining the best options. This collaborative approach merges human expertise with machine intelligence, accelerating innovation while addressing real-world challenges like food accessibility and system resilience.

By integrating AI with domain-specific knowledge, such as climate and chemistry, the potential for breakthroughs in food systems is immense. As Roy puts it, “We’re not just using AI to solve technical problems; we’re using it to tackle real global challenges that impact people daily.”

Leveraging AI for pharma and the public

As Head of Ontologies for SciBite, Dr Jane Lomax has an ambitious goal: to encode scientific knowledge into software so researchers can find and connect the information they need when they need it:

I believe ontologies have a fundamental role in leveraging the power of large language models — for the benefit of pharma and the public at large.

Based in Cambridge, UK, SciBite joined Elsevier in 2020. Their experts combine semantic AI with text analytics and data enrichment tools to help R&D professionals make faster, more effective decisions. As Jane explains:

We get people and machines to use the same language to talk about scientific things.

To do this, they take unstructured content and turn it into ordered machine-readable data for scientific discovery in the life sciences. “And this involves working with our expert scientific curators to encode their expertise into our software.”

With a PhD in Population Genetics and Parasitology and over 20 years of experience with FAIR data and ontologies — “basically, ontologies are a codification of scientific facts as we understand them” — Jane is a champion of those creating ontologies. “These are the people doing the foundational work, and they’re doing it on a shoestring,” she says.

She also believes ontologies offer a methodology to leverage the power of new AI technologies such as large language models (LLMs):

While LLMs can bring in their natural language and summarizing skills, ontologies can provide the backbone of scientific knowledge that the LLM can use, as well as making the output explainable and reproducible.

As with all AI, however, human input is crucial:

While AI technologies are super-powerful, the output must still be verified as truth. Ontologies represent the truth as agreed upon by humans: that something is this type of thing, and it relates to these other types of things. So if you can feed that into your AI, you get the best of both worlds.
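Jane's description of ontologies, "that something is this type of thing, and it relates to these other types of things," can be illustrated with a small sketch. The example below is only a toy under stated assumptions: the terms, the `IS_A` table and the helper names are hypothetical and are not taken from SciBite's software or any real ontology. It shows how type statements agreed by humans could be used to check claims extracted from an LLM's output.

```python
# Toy ontology: each term maps to its direct parent ("is a" relation).
# Illustrative terms only; not drawn from any real SciBite ontology.
IS_A = {
    "aspirin": "NSAID",
    "ibuprofen": "NSAID",
    "NSAID": "drug",
    "neuropathy": "nervous system disorder",
    "nervous system disorder": "disease",
}


def ancestors(term):
    """Return every broader type of `term`, nearest first."""
    found = []
    while term in IS_A:
        term = IS_A[term]
        found.append(term)
    return found


def is_supported(subject, claimed_type):
    """True if the ontology agrees that `subject` is a kind of `claimed_type`."""
    return claimed_type in ancestors(subject)


# Hypothetical claims pulled from an LLM summary, as (subject, type) pairs.
claims = [("aspirin", "drug"), ("neuropathy", "drug")]
checked = {claim: is_supported(*claim) for claim in claims}
```

In this sketch, a claim the ontology cannot support is flagged for a human to review rather than silently accepted, which is one simple way to make the output easier to explain and reproduce.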

Harnessing AI for library services

Dr Borui Zhang wasn’t thinking about a job in library services after earning her PhD in Theoretical Linguistics with a minor in Computer Science at the University of Minnesota in 2021. While there, she focused on applying machine learning to modeling low-resource languages. Chance intervened when her housemate, an academic librarian, shared a job listing for a new role at the University of Florida George A Smathers Libraries and encouraged her to apply:

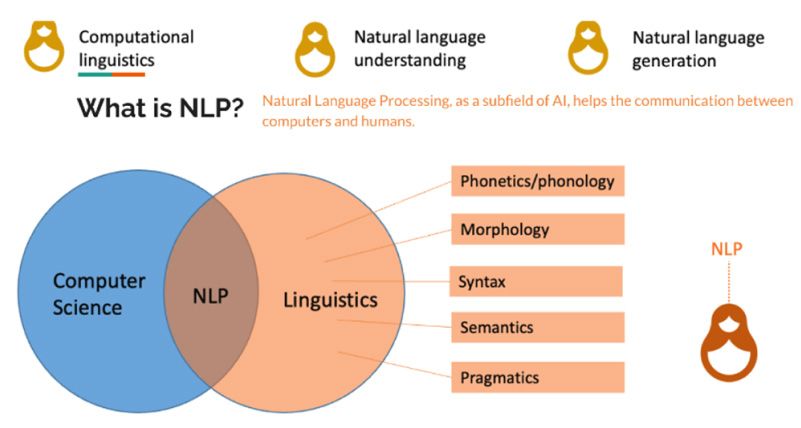

Though my title is Natural Language Processing Specialist, my nickname is ‘the AI librarian.’ But the nature of this job is really connected to my linguistics training.

A slide from Dr Borui Zhang's Intro to NLP Lecture: "Demonstrating the Connection of Five Basic Linguistic Components to NLP."

Borui (“Bri,” as she’s known at UF) was hired as part of a campuswide initiative by the University of Florida to invest in building AI skills and education across all faculties.

Her role strengthens the library’s Academic Research Consulting Services (ARCS) team. Made up of a dozen library professionals with a wide range of information sciences expertise, ARCS supports students, researchers and faculty from all areas of the university. They consult on areas such as research data management, digital humanities projects and spatial information services, and now on natural language processing and machine learning applications that can empower a variety of research processes.

“AI is just very powerful. It seems to be the trend for every life aspect in the future.”

The techniques of machine learning have become “a great tool for a lot of new researchers to test out research methodologies” that leverage natural language processing. As a result, Borui finds she is supporting researchers in a wide variety of fields. For example, she consulted with a medical research lab to develop a language model that predicts health outcomes for infants, based on using NLP to structure clinical notes.

She has worked on an educational design project with a graduate student in the College of Education for a “hackathon” conference submission. In museum practice, she has worked with a graduate student and faculty member team from the Florida Museum of Natural History, leveraging large language models (LLMs) and computer vision (CV) techniques to enhance the interpretation of image collections. This diversity makes her job, like many library roles, perpetually interesting because she sees how language, or “textual data,” connects her expertise with so many domains.

AI tips for libraries

Dr Borui Zhang offers the following ideas for how libraries can incorporate AI technology in their services:

- Learn and assess the AI-related enhancements in your existing research applications. “I see a lot of liaison librarians helping students and faculty with literature searches, and you can see those search databases have new AI features.”

- Investigate applying available tools to current tasks. In other types of academic library research support, or even in the traditional liaison role, librarians can begin to adopt AI into their existing domain. “The power of AI can very likely be suitable in all these areas.”

- Introduce students and researchers to AI early by discussing AI research techniques in library orientation programs.

What is the real risk of AI?

The arrival of large language models like ChatGPT has triggered debates across academia, government, business and the media. Discussions range from their impact on jobs and politics to speculation on the existential threat they could present to humanity.

Michael Wooldridge, Professor of Computer Science at the University of Oxford, described the advent of these large language models (LLMs) as being like “weird things beamed down to Earth that suddenly make possible things in AI that were just philosophical debate until three years ago.”

For Michael, the potential existential threat of AI is overstated, while the actual, even mortal, harms it can already cause are understated. And the potential it offers is tantalizing.

In terms of the big risks around AI, you don’t have to worry about ChatGPT crawling out of the computer and taking over. If you look under the hood of ChatGPT and see how it works, you understand that’s not going to be the case. In all the discussion around existential threat, nobody has ever given me a plausible scenario for how AI might be an existential risk.

“AI puts powerful tools in the hands of potentially bad actors who use it to do bad things. We’re going into elections ... where there is going to be a really big issue around misinformation.”

Instead, Michael sees the focus on this issue as a distraction that can “suck all the air out of the room” and ensure there’s no space to talk about anything else — including more immediate risks:

There’s a danger that nothing else ever gets discussed, even when there are abuses and harms being caused right now, and which will be caused over the next few years, that need consideration, that need attention, regulation and governance.

Michael outlined a scenario where a teenager with medical symptoms might find themselves too embarrassed or awkward to go to a doctor or discuss them with a caregiver. In such a situation, that teenager might go to an LLM for help and receive poor quality advice:

Responsible providers will try to intercept queries like that and say, ‘I don’t do medical advice.’ But it’s not hard to get around those guardrails, and when the technology proliferates, there will be a lot of providers who aren’t responsible. People will die as a consequence because people are releasing products that aren’t properly safeguarded.

That scenario — where technology proliferates without guardrails — is a major risk around AI, Michael argued. AI itself won’t seek to do us harm, but people misusing AI can and do cause harm.

“The British government has been very active in looking at risks around AI and they summarize a scenario they call the Wild West,” he said. “In that scenario, AI develops in a way that everyone can get their hands on LLMs with no guardrails, which then becomes impossible to control.”